Last updated on July 7, 2025

Generative AI in Cloud Workflows: New Levels of Operational Efficiency

Most people associate creativity with text, images, or music, and those are the domains where generative AI first made its mark. A substantial portion of operational work, however, is now handled at scale by embedding generative AI into cloud workflows. AI, once regarded as only a creative tool, now functions as an underlying platform for operational tasks. As organizations push for smarter and quicker processes, the automation possibilities of this convergence of AI and cloud architecture are becoming clear. At Blanco Infotech, we witness this shift daily. Businesses are not simply demanding faster output; they expect smarter automation, made possible by integrating generative models into existing systems. These new architectures deliver smarter work, not just harder work. Enterprise demands for scalability and adaptability, previously out of reach with traditional automation tools, are driving the change.

The Role of Generative AI in Enterprise Cloud Workflows

Generative AI is now viewed not just as a tool for producing content but as a source of structured, contextual, and actionable results that serve business objectives and can be integrated directly into digital delivery systems.

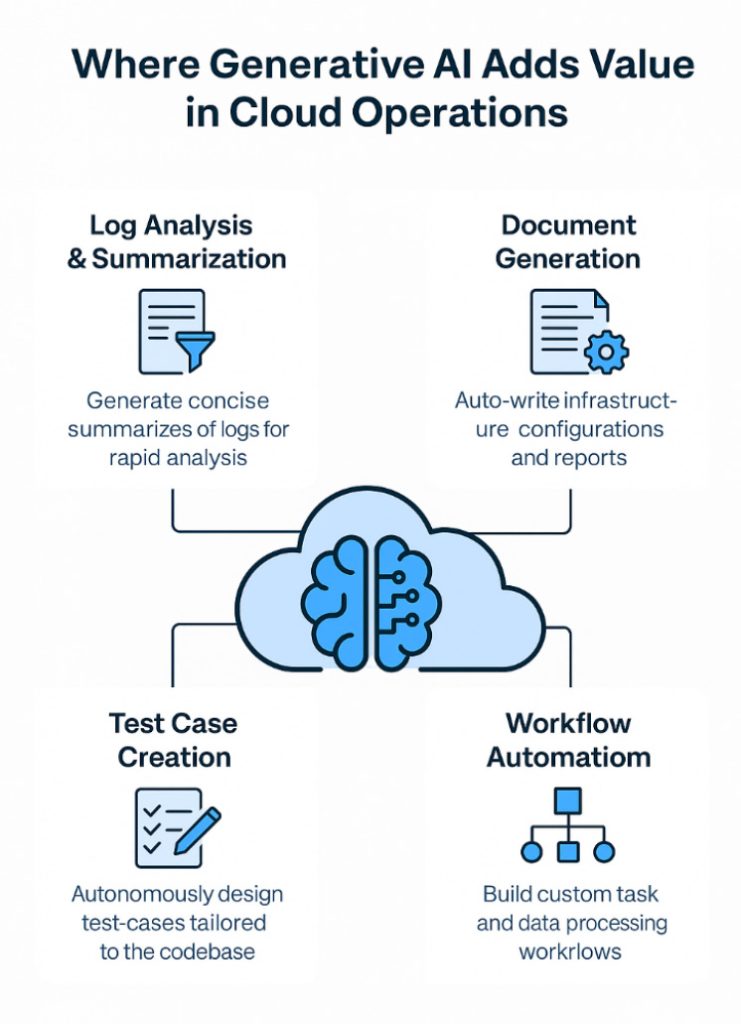

When embedded within cloud-native systems, generative AI can:

- Auto-generate technical documentation, release notes, and compliance reports

- Suggest improvements to configuration scripts or infrastructure-as-code (IaC) templates

- Create synthetic test cases for integration pipelines

- Analyze incidents in complex IT systems promptly and carefully so that service is maintained

- Draft standard operating procedures based on system behavior

With this automation in place, skilled teams spend less time on repetitive activities and can dedicate more time to complex problem solving and analysis.

Why Now? The Perfect Storm for AI and Cloud Convergence

Several significant shifts have shaped the adoption of generative AI in cloud operations. They are worth evaluating when considering current industry directions, because they strongly influence how the technology is integrated and indicate that further automation of standard workflows may be possible.

- Foundation models, such as GPT-4 and Claude, have become more accurate and capable as their architectures have advanced, and they can handle tasks once thought too complex for automation. They are designed to work with language, images, and even multimodal input, which enables a broad range of applications.

- Many cloud vendors are introducing API-first platforms, which promote modularity in cloud systems. These systems now expose endpoints that generative models and other automated systems can call on their own, sometimes with little or no human intervention.

- Cloud infrastructure is being modernized at a faster pace. Modern platforms allow operations teams to link AI-powered automation directly to existing DevOps pipelines.

Use Cases: AI-Enhanced Cloud Workflows in Action

1. Automated Change Logs & Release Notes: DevOps teams can auto-generate release notes by feeding commit messages and ticket references into a large language model, which returns well-formatted documentation. This keeps the structure of release notes consistent across deployments and removes the manual documentation work that used to precede every sprint cycle, which speeds up the workflow.

2. Generating IaC Deployments Using Automated Setups: Teams can generate deployment setups with less manual effort. This lowers the number of configuration mistakes and reduces the deployment rollbacks that used to slow down project timelines. Code suggestions can be adapted to specific environments, since no single template fits every case.

3. AI-Powered ChatOps for Incident Management: Responders can query a bot via chat instead of going through all logs and alerts manually. For example, someone could simply ask, “What caused the last deployment to fail?” and receive a real-time summary of logs, metrics, and traces. This gives incident responders immediate feedback during stressful events, and it replaces the summaries that operations staff previously had to compile by hand for every unexpected incident.

4. Infrastructure Testing and Simulation: Cloud environments can be tested under a variety of AI-generated scenarios, which lets teams evaluate system performance and security in a controlled fashion.
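
As a rough illustration of the release-notes use case, the sketch below groups commit messages and ticket references into a prompt that could then be passed to a large language model. It is a minimal sketch under stated assumptions: the commit prefix convention (`feat:`, `fix:`), the ticket format (such as `OPS-101`), and the function names `group_commits` and `build_prompt` are all hypothetical and not taken from any particular toolchain. The call to the model itself is deliberately left out, because it depends on the provider.

```python
import re
from collections import defaultdict

# Assumed ticket format such as "OPS-101"; adjust to your tracker.
TICKET_RE = re.compile(r"\b[A-Z][A-Z0-9]+-\d+\b")
# Assumed commit prefix convention, e.g. "fix(api): retry uploads".
PREFIX_RE = re.compile(r"^(?P<type>[a-z]+)(\([^)]*\))?!?:\s*(?P<summary>.+)$")

# Hypothetical mapping from commit type to release-note section.
SECTION_TITLES = {"feat": "Features", "fix": "Bug Fixes"}


def group_commits(messages):
    """Group commit subjects by section and collect ticket references."""
    sections = defaultdict(list)
    for message in messages:
        if not message.strip():
            continue  # skip empty commit messages
        subject = message.strip().splitlines()[0]
        match = PREFIX_RE.match(subject)
        if match:
            title = SECTION_TITLES.get(match.group("type"), "Other Changes")
            summary = match.group("summary")
        else:
            title, summary = "Other Changes", subject
        # Tickets may appear anywhere in the message, including the body.
        tickets = TICKET_RE.findall(message)
        sections[title].append((summary, tickets))
    return dict(sections)


def build_prompt(version, messages):
    """Build a plain-text prompt asking a model to draft release notes."""
    sections = group_commits(messages)
    lines = [
        f"Write release notes for version {version}.",
        "Use only the changes listed below; do not add items.",
        "",
    ]
    for title in sorted(sections):
        lines.append(f"{title}:")
        for summary, tickets in sections[title]:
            suffix = f" ({', '.join(tickets)})" if tickets else ""
            lines.append(f"- {summary}{suffix}")
    return "\n".join(lines)


if __name__ == "__main__":
    commits = [
        "feat(api): add bulk export endpoint\n\nRefs OPS-101",
        "fix: retry failed uploads\n\nRefs OPS-88",
        "Update README",
    ]
    print(build_prompt("1.4.0", commits))
```

Grouping the changes before the model sees them, and instructing it to use only the listed items, is one way to keep the generated notes consistent from sprint to sprint and easier to review.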

Considerations for Adoption: What Enterprises Need to Know

While the possibilities are vast, successful adoption of generative AI requires thoughtful planning:

- Data Privacy & Governance

LLM performance depends heavily on the quality and scope of the data a model is given, and substantial improvements tend to come only from longer-term research. In the meantime, organizations shouldn’t ignore the data-exposure risk during system deployment. Sensitive configuration values and access tokens can be inadvertently exposed if sufficient precautions are not taken, creating a significant security risk.

- Model Accuracy and Trust

Even advanced models can produce “hallucinations”: outputs containing information that was not present in the input data. These are often difficult to detect automatically without human review.

- Integration with DevOps Toolchains

AI is expected to operate inside the existing ecosystem without disrupting it. Seamless integration can be achieved when standard protocols are followed and compatibility with existing tools is maintained.

- Cost Awareness

Operating AI models, particularly those that require significant resources for inference, can cause compute expenses to rise rapidly, especially with large datasets or when workloads are not distributed efficiently.
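
Two of the considerations above, data privacy and cost awareness, can be illustrated with a simple pre-flight check that runs before any text is sent to a model. The sketch below is a minimal example under stated assumptions: the regular expressions are illustrative only and would miss many real secrets (a dedicated secret scanner is the safer choice), the four-characters-per-token ratio is a rough heuristic, and the price and budget values are hypothetical parameters supplied by the caller. The function names `redact_secrets`, `estimate_cost_usd`, and `prepare_prompt` are invented for this example.

```python
import re

# Illustrative patterns only; they will not catch every kind of credential.
SECRET_PATTERNS = [
    # HTTP bearer tokens, e.g. "Authorization: Bearer abc.def"
    re.compile(r"(bearer)(\s+)(\S+)", re.IGNORECASE),
    # key/value pairs whose key looks sensitive, e.g. "api_key=abc123"
    re.compile(
        r"(password|secret|token|api[_-]?key)(\s*[:=]\s*)(\S+)",
        re.IGNORECASE,
    ),
]


def redact_secrets(text):
    """Replace values that look like credentials with a placeholder."""
    for pattern in SECRET_PATTERNS:
        text = pattern.sub(
            lambda m: f"{m.group(1)}{m.group(2)}[REDACTED]", text
        )
    return text


def estimate_cost_usd(prompt, price_per_1k_tokens, chars_per_token=4):
    """Rough cost estimate; price and chars-per-token are assumptions."""
    estimated_tokens = len(prompt) / chars_per_token
    return estimated_tokens / 1000 * price_per_1k_tokens


def prepare_prompt(text, price_per_1k_tokens, budget_usd):
    """Redact secrets, then reject prompts whose estimate exceeds budget."""
    safe_text = redact_secrets(text)
    cost = estimate_cost_usd(safe_text, price_per_1k_tokens)
    if cost > budget_usd:
        raise ValueError(
            f"estimated cost ${cost:.4f} exceeds budget ${budget_usd:.4f}"
        )
    return safe_text, cost


if __name__ == "__main__":
    config = "region=us-east-1\napi_key=abc123\nAuthorization: Bearer abc.def"
    safe, cost = prepare_prompt(config, price_per_1k_tokens=0.5, budget_usd=1.0)
    print(safe)
    print(f"estimated cost: ${cost:.4f}")
```

Running both checks at a single entry point means every workflow that calls a model goes through the same redaction and budget guard, rather than each pipeline implementing its own.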

Blanco Infotech’s Approach to AI-Cloud Integration

At Blanco, we help enterprises integrate AI into the areas that matter most: infrastructure, operations, and performance optimization. Our solutions focus on connecting AI capability to the practical realities of running cloud technology.

We offer:

- Secure AI deployment on hybrid/multi-cloud setups

- Prompt engineering for domain-specific automation

- Custom integration of LLMs into CI/CD workflows

- Monitoring tools to track AI accuracy, drift, and usage

Integrating artificial intelligence with observability, automation, and deployment frameworks allows organizations to achieve new levels of operational excellence. They gain not only insights but also intelligent automation that supports more responsive environments.

Operational Intelligence is the New Competitive Edge

Generative AI is no longer treated as just an innovation; it is now a core part of enterprise operations, especially when combined with strong cloud systems. Organizations that prioritize noise reduction, faster project completion, and trusted automation have already started acting. Generative AI in the cloud does more than speed up work; it is a combination enterprises need. Continuous intelligent automation can drive business benefits, and organizations that want automation, reliability, and structured delivery should continue to explore these opportunities.

At Blanco Infotech, we’re helping forward-thinking enterprises unlock this potential every day.